2.1. Nonnegative Least Squares Regression

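Nonnegative least squares regression finds the least-squares solution of the equation \(Ax=b\), subject to the constraint that the coefficients \(x\) be nonnegative:

\[
\underset{x}{\text{argmin}} \; \| Ax - b \|_2^2 \quad \text{subject to} \quad x \geq 0
\]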

NonnegativeLinear takes arrays X, y in its fit method (X plays the role of \(A\) and y the role of \(b\)) and stores the coefficients \(w\), that is, the solution \(x\) above, in its coef_ member:

>>> from mcmodels.regressors import NonnegativeLinear
>>> reg = NonnegativeLinear()
>>> reg.fit([[0, 0], [1, 1], [2, 2]], [0, 1, 2])
NonnegativeLinear()
>>> reg.coef_
array([1., 0.])
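For comparison, the same kind of problem can be solved directly with SciPy's `scipy.optimize.nnls`. This is a minimal sketch for illustration only; we do not assume that NonnegativeLinear calls this routine internally. Note that in the example above both columns of X are identical, so every \(x \geq 0\) with \(x_1 + x_2 = 1\) fits the data exactly, and a solver may return any one of them. The second problem below, with an identity design matrix, is an illustrative choice that shows the constraint actually binding.

```python
import numpy as np
from scipy.optimize import nnls

# Same data as the NonnegativeLinear example: A is X, b is y.
A = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])
b = np.array([0.0, 1.0, 2.0])

# nnls solves argmin_x ||Ax - b||_2 subject to x >= 0 and returns
# the solution together with the residual norm ||Ax - b||_2.
x, residual_norm = nnls(A, b)
print(x, residual_norm)  # one exact nonnegative fit, residual ~0

# Illustrative problem where the unconstrained solution [1, -1] is infeasible:
# with A = I, the nonnegative solution simply clips the negative entry to 0.
x_clipped, _ = nnls(np.eye(2), np.array([1.0, -1.0]))
print(x_clipped)  # [1. 0.]
```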

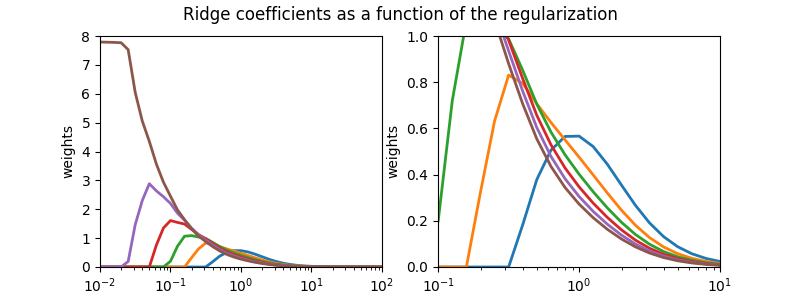

2.1.1. Nonnegative Ridge Regression

The equation \(Ax=b\) is said to be ill-conditioned if the columns of \(A\) are nearly linearly dependent. Ill-conditioned least squares problems are highly sensitive to small perturbations in \(b\), such as random measurement errors, and as a result produce estimates with high variance.
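A small NumPy sketch of this sensitivity: with two nearly dependent columns, perturbing one entry of \(b\) by \(10^{-4}\) moves the least-squares solution from about [1, 1] to about [0, 2]. The matrix, right-hand side, and perturbation are arbitrary illustrative choices.

```python
import numpy as np

eps = 1e-4
# Two columns that are nearly linearly dependent.
A = np.array([[1.0, 1.0],
              [1.0, 1.0 + eps]])

b = np.array([2.0, 2.0 + eps])          # exact solution is x = [1, 1]
b_perturbed = b + np.array([0.0, eps])  # change b by 1e-4 in one entry

x, *_ = np.linalg.lstsq(A, b, rcond=None)
x_perturbed, *_ = np.linalg.lstsq(A, b_perturbed, rcond=None)

print(np.linalg.cond(A))  # roughly 4 / eps, i.e. about 4e4
print(x)                  # approximately [1, 1]
print(x_perturbed)        # approximately [0, 2]
```

The condition number of \(A\) (the ratio of its largest to smallest singular value) is what amplifies the tiny change in \(b\) into a change of order one in \(x\).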

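We can improve the conditioning of the problem by penalizing the size of the coefficients \(x\). Using the squared L2 norm as the measure of size, we arrive at Tikhonov regularization, also known as ridge regression:

\[
\underset{x}{\text{argmin}} \; \| Ax - b \|_2^2 + \alpha^2 \| x \|_2^2
\]

where \(\alpha > 0\) is a regularization parameter controlling the strength of the penalty.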
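To see the effect of the penalty, the sketch below repeats an ill-conditioned example (nearly dependent columns, \(b\) perturbed by \(10^{-4}\)) with unconstrained ridge regression. It assumes the penalty is written as \(\alpha^2 \|x\|_2^2\) and solves the normal equations \((A^T A + \alpha^2 I)x = A^T b\); the helper name `ridge` and the value \(\alpha = 0.1\) are illustrative choices, not part of mcmodels.

```python
import numpy as np

def ridge(A, b, alpha):
    """Hypothetical helper: unconstrained ridge solution of
    argmin_x ||Ax - b||^2 + alpha^2 ||x||^2 via its normal equations."""
    n_features = A.shape[1]
    lhs = A.T @ A + alpha**2 * np.eye(n_features)
    return np.linalg.solve(lhs, A.T @ b)

eps = 1e-4
A = np.array([[1.0, 1.0],
              [1.0, 1.0 + eps]])   # nearly dependent columns
b = np.array([2.0, 2.0 + eps])
b_perturbed = b + np.array([0.0, eps])

alpha = 0.1
x = ridge(A, b, alpha)
x_perturbed = ridge(A, b_perturbed, alpha)

# Without the penalty this perturbation moves x by about 1.4 in norm;
# with it, the change is well under 1e-3.
print(np.linalg.norm(x_perturbed - x))
```

The price of this stability is bias: the ridge solution no longer fits \(b\) exactly, which is the usual variance/bias trade-off that \(\alpha\) controls.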

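We can incorporate a nonnegativity constraint and, after expanding the objective and dropping the constant \(b^T b\) and an overall factor of 2, rewrite the problem above as a quadratic programming (QP) problem:

\[
\underset{x}{\text{argmin}} \; \tfrac{1}{2} x^T Q x + c^T x \quad \text{subject to} \quad x \geq 0
\]

where

\[
Q = A^T A + \alpha^2 I \quad \text{and} \quad c = -A^T b
\]

Since \(Q\) is positive definite for \(\alpha > 0\), the problem is convex with a unique solution, which we can compute with any general-purpose quadratic programming solver.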
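As one example of such a solver, here is a minimal sketch that passes the QP to SciPy's SLSQP method through `scipy.optimize.minimize`, expressing \(x \geq 0\) as bound constraints. The helper name `nonnegative_ridge_qp` is hypothetical, and we make no claim that NonnegativeRidge is implemented this way. On the data used in the NonnegativeRidge example with \(\alpha = 1\), symmetry gives \((10 + \alpha^2)\,t = 5\) for each coefficient \(t\), i.e. \(t = 5/11 \approx 0.4545\).

```python
import numpy as np
from scipy.optimize import minimize

def nonnegative_ridge_qp(A, b, alpha):
    """Hypothetical helper: solve argmin_x 1/2 x^T Q x + c^T x s.t. x >= 0,
    with Q = A^T A + alpha^2 I and c = -A^T b, using SciPy's SLSQP."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n_features = A.shape[1]
    Q = A.T @ A + alpha**2 * np.eye(n_features)
    c = -A.T @ b

    def objective(x):
        return 0.5 * x @ Q @ x + c @ x

    def gradient(x):
        return Q @ x + c

    result = minimize(
        objective,
        x0=np.zeros(n_features),
        jac=gradient,
        method="SLSQP",
        bounds=[(0.0, None)] * n_features,  # encodes x >= 0
        options={"ftol": 1e-12},
    )
    return result.x

coef = nonnegative_ridge_qp([[0, 0], [1, 1], [2, 2]], [0, 1, 2], alpha=1.0)
print(coef)  # close to [0.45454545, 0.45454545]
```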

As with NonnegativeLinear, NonnegativeRidge takes arrays X, y in its fit method and stores the coefficients \(w\) in its coef_ member:

>>> from mcmodels.regressors import NonnegativeRidge
>>> reg = NonnegativeRidge(alpha=1.0)
>>> reg.fit([[0, 0], [1, 1], [2, 2]], [0, 1, 2])
NonnegativeRidge(alpha=1.0, solver='SLSQP')
>>> reg.coef_
array([0.45454545, 0.45454545])
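Another way to see nonnegative ridge regression, assuming the penalty \(\alpha^2 \|w\|_2^2\): stacking \(\alpha I\) under \(X\) and zeros under \(y\) gives \(\|Xw - y\|_2^2 + \alpha^2 \|w\|_2^2 = \left\| \begin{bmatrix} X \\ \alpha I \end{bmatrix} w - \begin{bmatrix} y \\ 0 \end{bmatrix} \right\|_2^2\), so the problem is an ordinary NNLS problem on an augmented system. The sketch below, with the hypothetical helper `nonnegative_ridge_augmented`, solves it with `scipy.optimize.nnls` and, for \(\alpha = 1\), reproduces the coefficients printed above.

```python
import numpy as np
from scipy.optimize import nnls

def nonnegative_ridge_augmented(X, y, alpha):
    """Hypothetical helper: nonnegative ridge as NNLS on an augmented system."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n_features = X.shape[1]
    # Append alpha * I as extra rows of X and matching zeros to y.
    X_aug = np.vstack([X, alpha * np.eye(n_features)])
    y_aug = np.concatenate([y, np.zeros(n_features)])
    w, _ = nnls(X_aug, y_aug)
    return w

w = nonnegative_ridge_augmented([[0, 0], [1, 1], [2, 2]], [0, 1, 2], alpha=1.0)
print(w)  # approximately [0.45454545, 0.45454545]
```

This reformulation needs only an NNLS routine rather than a general QP solver, at the cost of adding one row per feature to the design matrix.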

2.1.2. Nonnegative Lasso or Nonnegative Elastic Net

Both the nonnegative Lasso and the nonnegative Elastic Net are already implemented in the scikit-learn package:

- To perform nonnegative Lasso regression, use sklearn.linear_model.Lasso with the parameters fit_intercept=False, positive=True, or sklearn.linear_model.lasso_path with positive=True (the path functions do not fit an intercept, so they take no fit_intercept parameter).

- To perform nonnegative Elastic-Net regression, use sklearn.linear_model.ElasticNet with the parameters fit_intercept=False, positive=True, or sklearn.linear_model.enet_path with positive=True.
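A short sketch of these scikit-learn options. The data are an illustrative noise-free example generated from true coefficients [1, -1], and the regularization strengths (alpha=0.01, l1_ratio=0.5) are arbitrary choices: the unconstrained Lasso recovers a negative coefficient, while positive=True keeps every coefficient nonnegative.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso, lasso_path

# Illustrative data generated from the coefficients [1, -1] (no noise).
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
y = X @ np.array([1.0, -1.0])

# Unconstrained Lasso: the second coefficient comes out negative.
lasso = Lasso(alpha=0.01, fit_intercept=False).fit(X, y)

# positive=True constrains all coefficients to be >= 0.
nn_lasso = Lasso(alpha=0.01, fit_intercept=False, positive=True).fit(X, y)
nn_enet = ElasticNet(alpha=0.01, l1_ratio=0.5, fit_intercept=False,
                     positive=True).fit(X, y)

# lasso_path has no intercept option; positive=True applies at every alpha.
alphas, coefs, _ = lasso_path(X, y, positive=True)

print(lasso.coef_, nn_lasso.coef_, nn_enet.coef_)
print(coefs.shape)  # (n_features, n_alphas)
```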